If AI is to gain people’s trust, organisations should ensure they are able to account for the decisions that AI makes, and explain them to the people affected. Making AI interpretable can foster trust, enable control and make the results produced by machine learning more actionable. So how can AI-based decisions be explained?

Data goes in, answers come out. But how?

Artificial intelligence (AI) systems have become a vital part of everyday life. Whether they diagnose diseases or detect financial crime, their underlying algorithms can analyse vast amounts of data, detect patterns and use the results to make decisions. However, as their decision-making capability increases, AI models often grow more complex and become harder to understand. This can result in a sort of «black box», where data goes in and answers come out. Human beings are left puzzled about how any of those decisions and answers were reached.

Around 85% of CEOs worldwide believe that AI will significantly change the way they do business in the next five years, according to PwC’s latest Global CEO Survey. Yet, if AI is to gain people’s trust to carry out a wider range of tasks for an increasing number of organisations, leaders should ensure their AI models are interpretable. That is to say, organisations should be able to account for the decisions that AI makes and provide timely and comprehensible explanations to the stakeholders affected, whether they are regulators, auditors, customers, executives or data scientists. Understanding why a decision is made is often as important as the accuracy of the result.

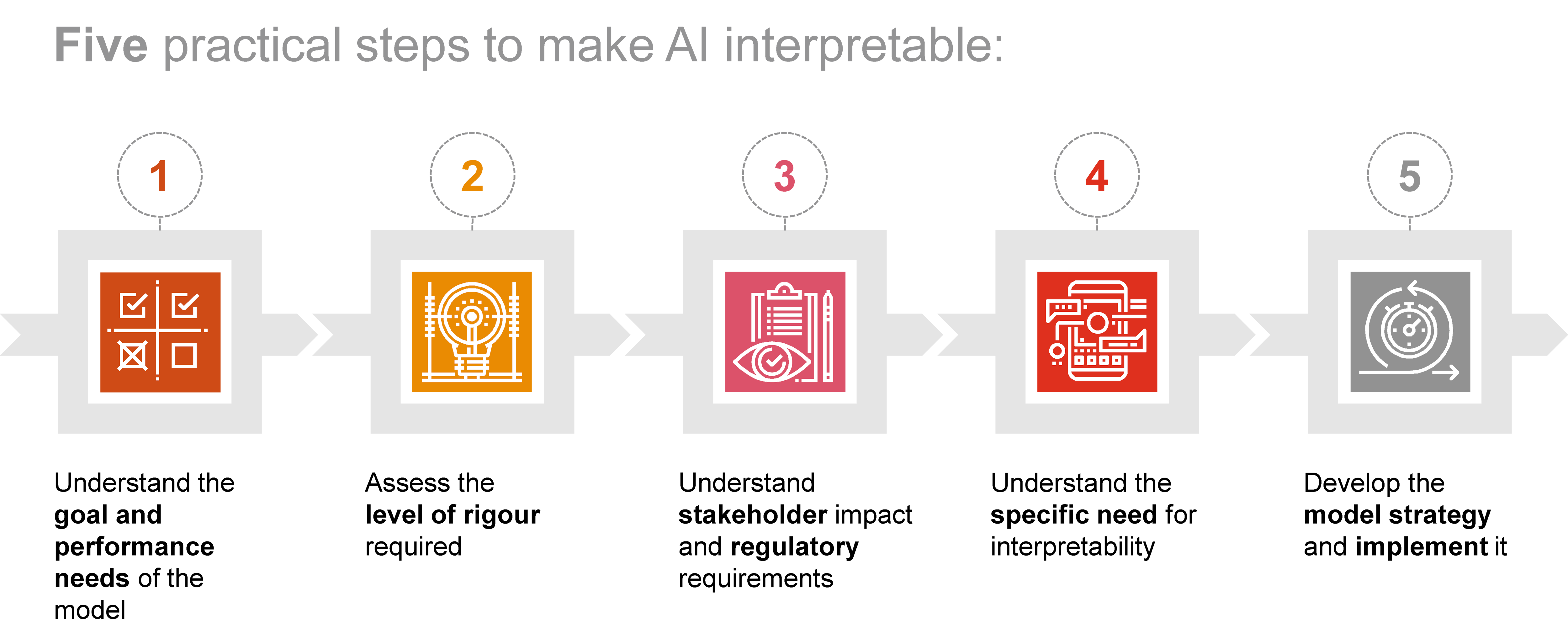

Organisations can make AI interpretable by implementing a five-step process: understanding the goal and performance needs of the model; assessing the level of rigour required; understanding stakeholder impact and regulatory requirements; understanding the specific need for interpretability; and developing the model strategy and implementing it.

Step 1: Understand the goal and performance needs of the model

Executives should take responsibility for defining the goal and scope of the AI model, and for evaluating whether it is the best solution to the problem at hand. Asking questions such as «Does machine learning solve the problem better than anything else?», «Is the cost of the AI system outweighed by the potential profit or cost savings?», «What performance do we need?» or «Do we have access to suitable data?» could help an organisation assess its options.

Once the decision is made to leverage AI, a meaningful and acceptable level of expected performance, articulated via metrics such as accuracy, should be clearly stated and agreed among stakeholders. For example, in the process of labelling emails as spam, even a software application with no AI capabilities can achieve about 50% accuracy on average simply by guessing at random, since there are only two choices. The question companies should consider is whether the performance improvement is large enough to justify the cost of developing an AI model, weighed alongside the possible safety and control risks that using AI brings.
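As a minimal sketch of this comparison, the snippet below measures a hypothetical model's accuracy against a trivial baseline that involves no AI. The labels and predictions are made-up illustration data, and the majority-class baseline and function names are assumptions chosen for the example rather than part of any PwC toolkit.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_baseline(y_true):
    """Predict the most common label for every example (no AI involved)."""
    most_common_label = Counter(y_true).most_common(1)[0][0]
    return [most_common_label] * len(y_true)


# Made-up labels for ten emails: 1 = spam, 0 = not spam.
y_true = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
# Made-up predictions from a hypothetical AI model.
y_model = [1, 0, 0, 1, 0, 1, 0, 1, 0, 0]

baseline_acc = accuracy(y_true, majority_baseline(y_true))
model_acc = accuracy(y_true, y_model)
uplift = model_acc - baseline_acc

print(f"baseline={baseline_acc:.2f} model={model_acc:.2f} uplift={uplift:.2f}")
```

On this made-up sample the baseline already reaches 70%, because most of the emails are not spam. The uplift over such a baseline, rather than the raw accuracy, is the figure to weigh against development cost and risk.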

It is important to bear in mind that AI models are not perfect, and expecting 100% accuracy on tasks, particularly when they are complex in nature, is unrealistic. However, more critical decisions do require companies to set higher expected performance targets.

Both the expected level of performance and the complexity of data used for an AI model’s decision-making processes should guide the organisation in its evaluation and selection of possible machine learning models.

Step 2: Assess the level of rigour required

Not all AI-based tasks require interpretability. It is not necessary to understand the AI that sorts emails into a spam folder, for example. Mistakes are rare and easily rectified, so there is little value in being able to explain decisions. However, for an AI model that spots financial fraud, interpretability could be an essential part of the evidence-gathering process before a prosecution. The intended use of the model is therefore an important consideration in whether to add interpretability.

In PwC’s Responsible AI Toolkit, interpretability requirements are a function of rigour: whether the results of the AI’s decisions could harm humans, organisations, or others.

To determine the rigour required in a given use case, organisations should look at two factors:

- criticality, which refers to the extent to which human safety, money and the organisation’s reputation are at risk, and

- vulnerability, which refers to the likelihood that human operators working with the AI will distrust its decisions and override it, making it redundant.

The more critical and vulnerable the use case is, the more likely we need AI to be explainable and transparent.
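One way to make this assessment repeatable is to score both factors and map them to a rigour band. The sketch below is purely illustrative: the 1 to 5 scales, the multiplication of the two factors, the thresholds and the example scores are all assumptions, not the scoring used in PwC’s Responsible AI Toolkit.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    criticality: int    # 1 (low) to 5 (high): safety, money, reputation at risk
    vulnerability: int  # 1 (low) to 5 (high): likelihood operators override the AI


def rigour_level(use_case: UseCase) -> str:
    """Map the two factors to a coarse rigour band (hypothetical thresholds)."""
    score = use_case.criticality * use_case.vulnerability
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Example scores are assumptions made for illustration only.
spam_filter = UseCase("spam filter", criticality=1, vulnerability=1)
treatment_support = UseCase("treatment recommendation", criticality=5, vulnerability=4)

for case in (spam_filter, treatment_support):
    print(f"{case.name}: rigour {rigour_level(case)}")
```

A use case that lands in the higher bands would then be a candidate for the explainability and transparency measures discussed in the following steps.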

In one example, PwC Switzerland and Germany worked with the NeuroTransData (NTD) network of physicians to develop AI-based software that delivers personalised treatment to multiple sclerosis (MS) patients. The system, predictive healthcare with real-world evidence for neurological disorders, is software driven by an algorithm based on data collected from 25,000 patients over more than 10 years.

Since the system is designed to help doctors recommend specific and personalised forms of treatment, it was clear that they would not adopt it unless they could trust the outcome. They would also want to explain their reasoning to patients when suggesting a particular form of therapy. Relying on a black box AI system in this instance could be hazardous to the healthcare system morally and financially, and it could potentially undermine patient-doctor trust. Instead, having two support systems, one human and one AI, both interpretable and explainable, created something much more reliable. There are fewer errors because if the two systems agree, they reinforce each other; and if they do not, it is a sign that the process needs validating.

Step 3: Understand stakeholder impact and regulatory requirements

To achieve the degree of interpretability that satisfies all parties affected by the AI system, and to comply with the regulation that applies in the particular use case, organisations should consider interpretability from the outset. Asking the following questions can help work towards interpretable systems:

- Who are the stakeholders and what information do they need?

- What constitutes a good or sufficient explanation?

- What information should be included in an interpretability report for each respective stakeholder?

- How much are the organisation and its stakeholders willing to compromise on the performance of AI models so as to accommodate interpretability?

For example, a bank customer may be interested in knowing why her application for a loan was declined, what needs to change for a successful application, and most importantly that she is not being discriminated against. On the other hand, an auditor or risk officer might be interested in whether the AI model is fair to a wider group, and how accurate it is. To answer both groups, the bank needs a variety of information about the AI process, from the preparation of the dataset to training and application steps. An organisation should be able to explain in a timely manner both how a model works holistically and how an individual decision has been reached.

In addition, as the assessment of criticality described in step 2 shows, interpretability is imperative when the consequences of an AI system's actions are significant and can affect the physical or mental safety of end-users, or curtail a person’s rights and freedom.

Where mandated by law, such as the European Union’s General Data Protection Regulation (GDPR) on the «right to explanation», AI systems that rely on a customer's personal information should be able to explain how such information is used and to what ends. The Monetary Authority of Singapore (MAS) has also laid down a set of principles to promote fairness, ethics, accountability and transparency (FEAT) in the use of AI and data analytics in finance to foster greater confidence and trust in the technology.

In such cases, interpretability is not a luxury addition to an AI system; it is simply a requirement.

Step 4: Understand the specific need for interpretability

Each stakeholder may have a different need for explainability and interpretability: the need for trust, in the case of the doctors and patients in the multiple sclerosis treatment project described above, or the ability to act on the results of AI, as in the maritime risk assessment or internal audit examples, described below.

In other instances, stakeholders may need AI systems to base their decisions on the factors that can be controlled by the human beings that interact with them.

Consider, for example, a set of data consisting of variables affecting risk in maritime activity. Some variables can be controlled, such as the experience level of the crew onboard the ship, while others cannot, for example the economic or political situation in the trading region in which the ship operates. The variables are fed into a black box AI system, and the output shows that a ship has high risk, but without indicating why the model made this decision. How can ship owners or operators lower the risk factors in this situation?

With a black box, they cannot. However, organisations could instead deploy AI models that are easier to interpret – though perhaps not as powerful – such as decision trees, linear models, and optimised combinations of the two. These new models can then be compared with the black box results in terms of accuracy, and their interpretability will ultimately provide greater insight into the contribution of each factor to the overall risk assessment. Shipping companies could then address the risk factors over which they do have control.
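To illustrate why a simpler model is easier to act on, the sketch below uses a hand-written linear risk model in which each factor's contribution is just its weight times its value. The weights, the feature values and the `maintenance_backlog` factor are hypothetical; only crew experience and the regional situation come from the example above.

```python
# Hypothetical weights of a linear risk model (positive = raises risk).
WEIGHTS = {
    "crew_inexperience": 0.5,     # controllable (from the example above)
    "maintenance_backlog": 0.2,   # controllable (hypothetical extra factor)
    "regional_instability": 0.3,  # not controllable (from the example above)
}
CONTROLLABLE = {"crew_inexperience", "maintenance_backlog"}


def contributions(features):
    """Per-factor contribution to the risk score: weight * feature value."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


def risk_score(features):
    """Overall risk is the sum of the per-factor contributions."""
    return sum(contributions(features).values())


def actionable_factors(features):
    """Controllable factors, sorted by how much they add to the risk."""
    contrib = contributions(features)
    ranked = sorted(CONTROLLABLE, key=lambda name: contrib[name], reverse=True)
    return [(name, contrib[name]) for name in ranked]


# Made-up ship, with every factor scaled to the range 0..1.
ship = {
    "crew_inexperience": 0.8,
    "maintenance_backlog": 0.1,
    "regional_instability": 0.9,
}

print(f"risk score: {risk_score(ship):.2f}")
for name, value in actionable_factors(ship):
    print(f"  can act on {name}: contributes {value:.2f}")
```

For this made-up ship, crew inexperience is the largest controllable contributor, so that is where an operator would start. A black box returning only the overall score would not reveal this.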

In another example, an organisation decides to implement an AI system to identify unusual activity in its payroll process and chooses an unsupervised machine learning approach to perform this task. Upon putting the new tool to work, executives realise that the internal audit team cannot follow up on the suspicious activity reported by the system because they are unable to understand what triggered the reporting of these anomalies in the first place. In this case, adding an explainability layer to the model could resolve the issue and present the internal audit team with actionable results.
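A minimal sketch of such an explainability layer is shown below. It assumes a very simple z-score detector standing in for the unsupervised method, which the example does not specify, and it reports which feature pushed a payslip over the threshold. The payroll figures, feature names and threshold are made up.

```python
from statistics import mean, stdev

FEATURES = ("hours_worked", "amount_paid")

# Made-up payroll history: (hours_worked, amount_paid) per payslip.
history = [
    (160, 5000), (158, 4950), (162, 5050),
    (160, 5000), (161, 5020), (159, 4980),
]


def fit(rows):
    """Per-feature mean and sample standard deviation of the history."""
    columns = list(zip(*rows))
    return [(mean(column), stdev(column)) for column in columns]


def explain(row, stats, threshold=3.0):
    """Return the features whose z-score exceeds the threshold, with the score."""
    reasons = {}
    for name, value, (mu, sigma) in zip(FEATURES, row, stats):
        z = (value - mu) / sigma
        if abs(z) > threshold:
            reasons[name] = round(z, 1)
    return reasons


stats = fit(history)
suspicious = (160, 9000)  # normal hours, unusually high payment

reasons = explain(suspicious, stats)
if reasons:
    print("flagged because of:", reasons)
else:
    print("not flagged")
```

Here the audit team would see that the payment amount, not the hours worked, triggered the alert, which gives them a concrete starting point for follow-up.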

Step 5: Develop the model strategy and implement it

With the considerations in steps 1 to 4 in mind, organisations are better prepared to develop the interpretability strategy for their AI model and implement it.

If the particular AI system under consideration is a black box but interpretability is required by one or more stakeholders, then the organisation has a number of options: leaders could decide to use a simpler, although sometimes less effective, AI model instead; they could use other techniques to add interpretability; or they could find different ways to mitigate risk, such as adding decision-making processes carried out by humans to provide a «second opinion».

Starting with the first option, simple models such as a decision tree can be interpreted relatively easily by following the path from the top of the branches, the parents, to the bottom, the children. A deep neural network, with perhaps millions of degrees of freedom, might perform better but it is much harder to explain. When evaluating the implications of using an AI system, decision makers should weigh this trade-off between performance and interpretability.
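Following the path from the top of a tree to a leaf can be sketched in a few lines. The tree below is hand-written for a hypothetical loan decision; the features, thresholds and applicant data are assumptions made for illustration.

```python
# A hand-written tree for a hypothetical loan decision. Internal nodes test
# one feature against a threshold; leaves carry the decision.
TREE = {
    "feature": "income", "threshold": 40000,
    "below": {"decision": "decline"},
    "at_or_above": {
        "feature": "debt_ratio", "threshold": 0.4,
        "below": {"decision": "approve"},
        "at_or_above": {"decision": "decline"},
    },
}


def decide_with_path(node, applicant):
    """Walk from the root to a leaf, recording each test that was applied."""
    path = []
    while "decision" not in node:
        name, threshold = node["feature"], node["threshold"]
        value = applicant[name]
        if value < threshold:
            path.append(f"{name} = {value} < {threshold}")
            node = node["below"]
        else:
            path.append(f"{name} = {value} >= {threshold}")
            node = node["at_or_above"]
    return node["decision"], path


decision, path = decide_with_path(TREE, {"income": 52000, "debt_ratio": 0.55})
print(decision)
for step in path:
    print("  because", step)
```

The recorded path is itself the explanation: each step names the factor tested and the threshold it was compared with.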

Alternatively, organisations can continue with a complex AI model and add interpretability through frameworks such as SHapley Additive exPlanations (SHAP) or Local Interpretable Model-Agnostic Explanations (LIME), which can explain AI decisions by tweaking inputs and observing the effect on results. In other words, they do not need to see inside the black box, but instead can infer its workings through a trial-and-error method.
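The idea of tweaking inputs and observing the effect can be sketched with the Shapley value that SHAP is named after. The code below does not use the SHAP or LIME libraries. It computes exact Shapley values by brute force for a made-up three-feature model, treating a feature as "absent" by replacing it with a baseline value, which is an assumption of this sketch. This is only feasible for a handful of features; practical tools rely on approximations or model-specific shortcuts.

```python
from itertools import combinations
from math import factorial


def black_box(x):
    """Stand-in for an opaque model: a made-up score with an interaction term."""
    return 2.0 * x["a"] + 1.0 * x["b"] + 3.0 * x["a"] * x["c"]


def shapley_values(model, instance, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Weight of coalitions of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without = set(subset)
                total += weight * (value(without | {name}) - value(without))
        phi[name] = total
    return phi


instance = {"a": 1.0, "b": 2.0, "c": 1.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}

phi = shapley_values(black_box, instance, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.2f}")
```

The contributions add up to the difference between the model's output for the instance and for the baseline, so the explanation accounts for the whole prediction without opening the black box.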

By following the above five steps, organisations can make interpretability a key performance dimension of responsible AI. It helps to support the other dimensions, bias and fairness, and robustness and security, while being underpinned by a foundation of end-to-end governance, and it enables decisions that are ethically sound and compliant with regulations.

Making AI interpretable can foster trust, enable control and make the results produced by machine learning more actionable.

This article is part of PwC’s Responsible AI initiative. Visit the Responsible AI website for further articles and information on PwC’s comprehensive suite of frameworks and toolkits, and to take the free PwC Responsible AI Diagnostic Survey.

Authors:

Christian Westermann, PwC Switzerland

Christian Blakely, PwC Switzerland

Tayebeh Razmi, PwC Switzerland

Bahar Sateli, PwC Canada

PwC refers to the PwC network and/or one or more of its member firms, each of which is a separate legal entity. Please see www.pwc.com/structure for further details.