In collaboration with:

Johnny Chivers, EMEA AWS Technical Director

Aidan Caffrey, Distinguished Architect and UK FS Data and AI Engineering Lead

Organisations across industries are realising the transformational power of AI agents—streamlining operations and tackling complex business tasks at scale. However, many deployments encounter issues with managing state, ensuring scalability, and maintaining operational stability once complexity increases. This post outlines an event-driven framework that leverages Apache Kafka for messaging and BPMN-based process orchestration to deliver the resilience, efficiency, and compliance modern enterprises require.


Key challenges of scaling AI agents

Maintaining a coherent system state

As AI agents multiply within a system, managing a single, reliable source of truth becomes difficult. Traditional, synchronous service-to-service integrations often add latency and complexity, creating performance bottlenecks that lead to partial outages or slowdowns. For enterprises dealing with financial transactions or regulated data (e.g., healthcare), consistent state management and minimal downtime are non-negotiable.

Overcoming scalability bottlenecks

Many AI-driven systems struggle to scale during peak demand. Direct service integrations can lock systems into rigid architectural constraints, limiting the ability to independently scale components. In addition, handling large volumes of event processing while preserving data consistency often proves a bottleneck. The result is a system that cannot seamlessly accommodate traffic spikes or seasonal surges.

Regulatory compliance and observability

In highly regulated sectors, every transaction must be auditable. AI-powered decisions require traceability and explainability. Maintaining observability—to detect model drift, potential biases, or anomalies—often adds extra overhead to the system, calling for robust logging and easily auditable event trails.

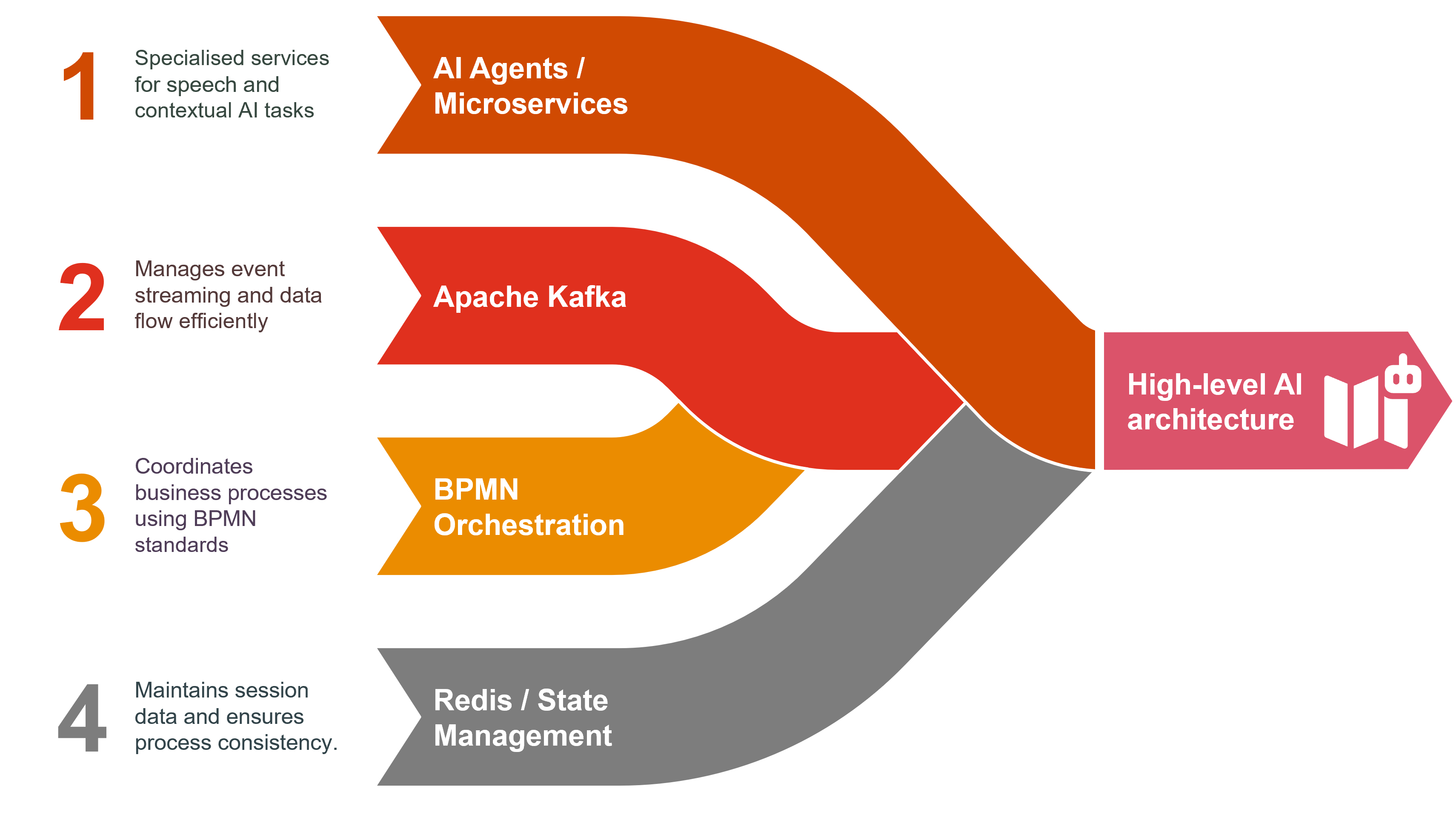

The event-driven orchestration approach

To address these challenges, we propose an event-driven architecture that couples:

- Apache Kafka for asynchronous messaging and event logging.

- BPMN-based orchestration (e.g., Camunda 8, powered by the Zeebe engine) for managing long-running workflows and decision trees.

Event-driven AI agents framework

Instead of AI services calling one another directly, each event is published to Kafka, allowing agents and services to operate asynchronously. Key advantages:

Seamless scalability

Individual components (e.g., speech-to-text or fraud detection services) scale independently based on message throughput. If a sudden spike occurs (peak trading day, e-commerce flash sale), only the relevant components need to scale.
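To make the scaling argument concrete, the sketch below assumes a topic split into partitions and a consumer group of identical service instances. `assign_partitions` is a hypothetical, simplified range-style assignment, similar in spirit to Kafka's range assignor but not the Kafka client API. It shows that adding instances spreads the partitions more thinly, and that the partition count caps how far one consumer group can usefully scale out.

```python
def assign_partitions(num_partitions: int, consumers: list[str]) -> dict[str, list[int]]:
    """Split partitions 0..num_partitions-1 into contiguous ranges, one range per consumer.

    Simplified, hypothetical range-style assignment for illustration only.
    """
    ordered = sorted(consumers)  # deterministic order, as range assignment sorts members
    per_consumer, remainder = divmod(num_partitions, len(ordered))
    assignment: dict[str, list[int]] = {}
    start = 0
    for i, consumer in enumerate(ordered):
        # The first `remainder` consumers each take one extra partition.
        count = per_consumer + (1 if i < remainder else 0)
        assignment[consumer] = list(range(start, start + count))
        start += count
    return assignment


if __name__ == "__main__":
    # Hypothetical fraud-detection topic with 6 partitions and 2 service instances.
    print(assign_partitions(6, ["fraud-1", "fraud-2"]))
    # Peak trading day: scale out to 3 instances; each now handles fewer partitions.
    print(assign_partitions(6, ["fraud-1", "fraud-2", "fraud-3"]))
    # More instances than partitions: the extra instance receives nothing.
    print(assign_partitions(2, ["fraud-1", "fraud-2", "fraud-3"]))
```

In a real deployment, Kafka's group coordinator rebalances partitions automatically when instances join or leave. The sketch only illustrates the arithmetic behind that behaviour.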

Resilience and fault tolerance

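Kafka retains events in a durable log (typically replicated across brokers) for a configurable retention period. As a result, consumers can replay events from their last committed offset after downtime or disruption. This is vital in regulated areas such as finance and healthcare, where data loss is unacceptable.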
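Replay works because consumers track their position (offset) in the log rather than deleting messages. The minimal sketch below uses an in-memory list as a stand-in for one partition. `PartitionLog`, `process_batch` and the `fail_at` crash hook are illustrative names, not Kafka APIs. The offset is committed only after a batch succeeds, so a crash leads to reprocessing rather than loss (at-least-once delivery).

```python
from typing import Callable, Optional


class PartitionLog:
    """Append-only, in-memory stand-in for one Kafka topic partition (illustrative only)."""

    def __init__(self) -> None:
        self._events: list[str] = []

    def append(self, event: str) -> int:
        """Append an event and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def read_from(self, offset: int) -> list[tuple[int, str]]:
        """Return (offset, event) pairs from `offset` onward; reading never deletes events."""
        return [(i, self._events[i]) for i in range(offset, len(self._events))]


def process_batch(
    log: PartitionLog,
    committed: int,
    handler: Callable[[str], None],
    fail_at: Optional[int] = None,
) -> int:
    """Process every event from the committed offset and return the new committed offset.

    The offset only advances after the whole batch succeeds (at-least-once delivery).
    `fail_at` is a hypothetical hook that simulates a crash at a given offset.
    """
    batch = log.read_from(committed)
    for offset, event in batch:
        if offset == fail_at:
            raise RuntimeError(f"simulated crash at offset {offset}")
        handler(event)
    return committed + len(batch)


if __name__ == "__main__":
    log = PartitionLog()
    for name in ["e0", "e1", "e2", "e3"]:
        log.append(name)

    handled: list[str] = []
    committed = 0
    try:
        committed = process_batch(log, committed, handled.append, fail_at=2)
    except RuntimeError as exc:
        print("consumer failed:", exc)  # committed offset is still 0

    # After restart, the consumer replays from its last committed offset.
    committed = process_batch(log, committed, handled.append)
    print(committed, handled)
```

The replay delivers `e0` and `e1` a second time. This is why downstream consumers in such pipelines are usually made idempotent.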

Clear separation of concerns

BPMN-based orchestration handles the business process flow, while AI agents focus on analysis and decision-making. This modular design reduces complexity and improves maintainability.

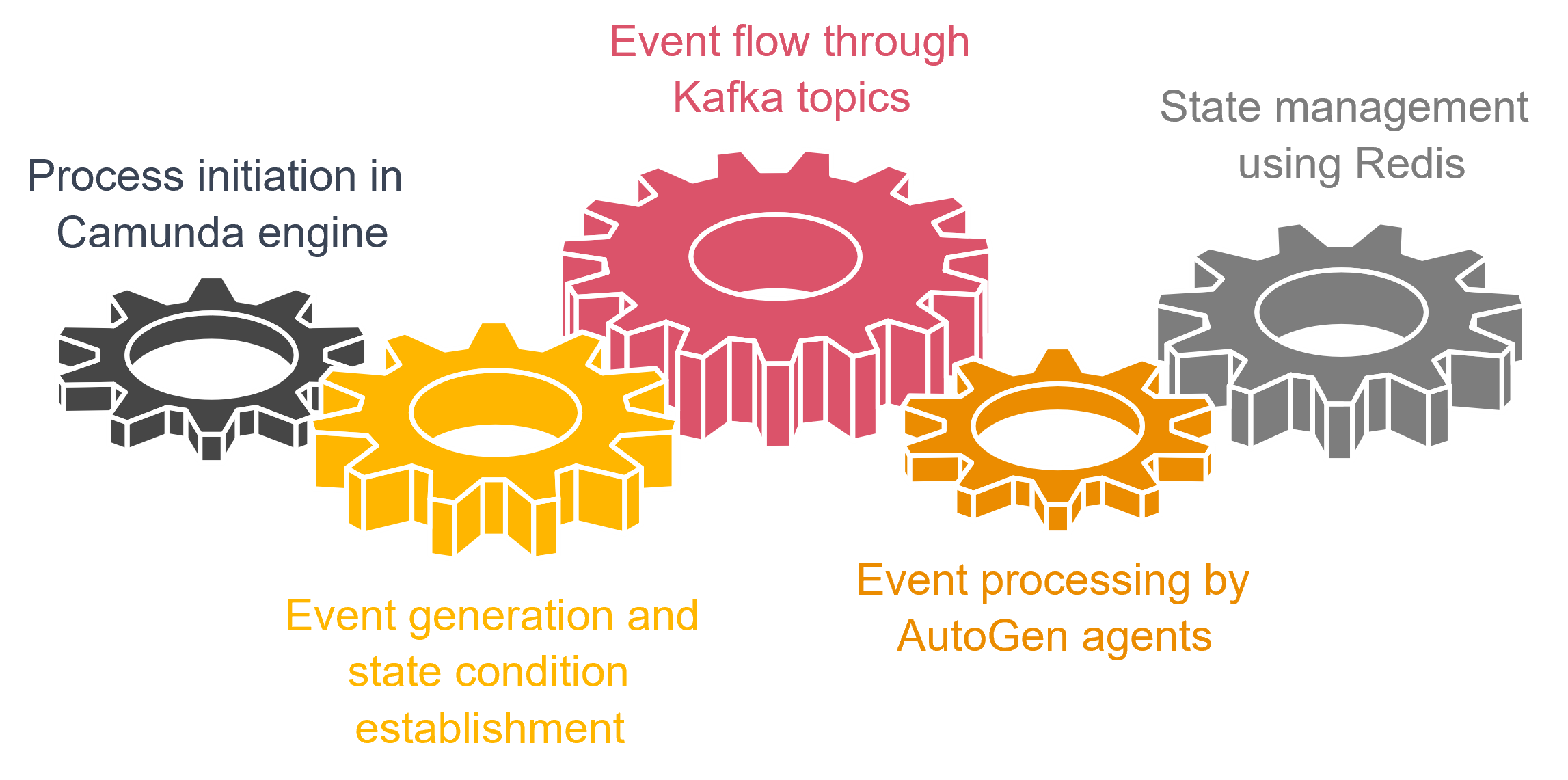

How BPMN and Kafka interact

A long-running business process is modelled in BPMN (for example, loan origination or complex voice-based customer support).

Each process step is mapped to events flowing through Kafka topics.

AI agents subscribe to relevant Kafka topics, process the events (e.g., run a machine learning model), and publish results back to Kafka.

The BPMN engine listens for specific events to move the workflow to the next stage—fully decoupled from the AI services themselves.

By using this loose coupling, enterprises can adapt individual AI tasks, business logic, or compliance steps without affecting the entire system.
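The four steps above can be sketched as a toy, in-memory model. The model uses three stand-ins:

- `EventBus` stands in for Kafka topics. Dispatch is synchronous purely for readability.
- `WorkflowEngine` stands in for the BPMN engine.
- `register_agent` wires up a hypothetical AI agent.

The topic naming (`<step>.requested` / `<step>.done`), the loan steps and the thresholds are all assumptions. This is not how Camunda 8 is configured or programmed, so treat it only as an illustration of the pattern.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for Kafka topics; dispatch is synchronous for readability."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.history: list[tuple[str, dict]] = []  # every published event, in order

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        self.history.append((topic, event))
        for handler in list(self._subscribers[topic]):
            handler(event)


class WorkflowEngine:
    """Toy orchestrator: moves each process instance along an ordered list of steps."""

    def __init__(self, bus: EventBus, steps: list[str]) -> None:
        self.bus = bus
        self.steps = steps
        self.position: dict[str, int] = {}  # instance id -> index of the awaited step
        self.outcome: dict[str, str] = {}   # instance id -> "completed" / "rejected"
        for step in steps:
            # Bind `step` per subscription so the engine knows which result arrived.
            bus.subscribe(f"{step}.done", lambda event, s=step: self._on_done(s, event))

    def start(self, instance_id: str, payload: dict) -> None:
        self.position[instance_id] = 0
        self.bus.publish(f"{self.steps[0]}.requested", {"instance": instance_id, **payload})

    def _on_done(self, step: str, event: dict) -> None:
        instance_id = event["instance"]
        if instance_id in self.outcome or self.steps[self.position[instance_id]] != step:
            return  # finished instance, or a stale/duplicate result: ignore it
        if not event["passed"]:
            # A simple exclusive gateway: any failed check ends the process.
            self.outcome[instance_id] = "rejected"
            self.bus.publish("workflow.rejected", event)
            return
        next_index = self.position[instance_id] + 1
        self.position[instance_id] = next_index  # update state before publishing
        if next_index < len(self.steps):
            self.bus.publish(f"{self.steps[next_index]}.requested", event)
        else:
            self.outcome[instance_id] = "completed"
            self.bus.publish("workflow.completed", event)


def register_agent(bus: EventBus, step: str, decide: Callable[[dict], bool]) -> None:
    """Hypothetical AI agent: consumes '<step>.requested' and publishes '<step>.done'."""

    def handle(event: dict) -> None:
        # `decide` stands in for a model call (e.g. a scoring model).
        bus.publish(f"{step}.done", {**event, "passed": decide(event)})

    bus.subscribe(f"{step}.requested", handle)


if __name__ == "__main__":
    bus = EventBus()
    engine = WorkflowEngine(bus, ["credit-check", "fraud-screen"])
    register_agent(bus, "credit-check", lambda e: e["credit_score"] >= 600)
    register_agent(bus, "fraud-screen", lambda e: e["amount"] < 50_000)

    engine.start("loan-1", {"credit_score": 720, "amount": 20_000})
    engine.start("loan-2", {"credit_score": 550, "amount": 10_000})
    print(engine.outcome)
    for topic, event in bus.history:
        print(topic, event["instance"])
```

The engine never calls an agent directly. It only reacts to result events, and the agents only react to request events. The `history` list hints at how the same event stream doubles as an audit trail.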

Implementation Architecture Data Flow

Case studies: Practical implementations

Conversational AI at scale

A conversational AI application uses Azure Speech Services for real-time voice interactions. Audio data is streamed into Kafka, where separate services consume it for speech-to-text conversion, context-aware response generation, and text-to-speech synthesis. Each result is published as an event, so microservices can react asynchronously. Because each step is decoupled, the system can scale horizontally to thousands of concurrent sessions while preserving low latency.

Direct voice-to-voice AI models

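In advanced setups, AI models process voice input directly and generate synthetic voice output in real time, bypassing text intermediaries. Meta’s SeamlessM4T, for example, can take speech in and produce speech out (speech-to-speech translation) within a single model. By contrast, models such as OpenAI’s Whisper (speech recognition) and Google’s WaveNet (speech synthesis) each cover only one side, audio input or audio output. They are typically combined in pipelines that still pass through text.

- Event-driven flow: Kafka topics manage each stage (voice input processing, intent recognition, response generation, voice synthesis) asynchronously.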

- Scaling and latency: Edge computing and WebRTC-based streaming can reduce network delays, while GPU acceleration shortens model inference time.

- Context management: Redis or similar in-memory stores provide a “session memory,” so the AI agent can recall user context, enabling human-like back-and-forth conversations.

This design accommodates thousands of concurrent conversations with ultra-low latency—ideal for customer support, voice-based digital assistants, and hands-free IoT applications.
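A session memory of the kind described above can be sketched as a key-value store with a per-session expiry. `SessionMemory` is a hypothetical in-memory stand-in, not the Redis client API. In Redis itself, the equivalent would typically be one key per session with an expiry set on it (for example via `EXPIRE`). The injectable `clock` parameter and the `max_turns` limit are assumptions, added so that expiry can be tested deterministically and context stays bounded.

```python
import time
from typing import Callable


class SessionMemory:
    """In-memory stand-in for a Redis-style session store with per-session expiry.

    Hypothetical helper for illustration; not the Redis client API.
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data: dict[str, tuple[float, list[str]]] = {}  # id -> (expires_at, turns)

    def get(self, session_id: str) -> list[str]:
        """Return recent conversation turns, or [] if the session expired or never existed."""
        entry = self._data.get(session_id)
        if entry is None or entry[0] <= self.clock():
            self._data.pop(session_id, None)  # lazily evict expired sessions
            return []
        return list(entry[1])

    def append_turn(self, session_id: str, turn: str, max_turns: int = 10) -> None:
        """Add a turn, keep only the latest `max_turns`, and refresh the session expiry."""
        turns = self.get(session_id) + [turn]
        self._data[session_id] = (self.clock() + self.ttl, turns[-max_turns:])


if __name__ == "__main__":
    memory = SessionMemory(ttl_seconds=300)
    memory.append_turn("call-42", "user: I'd like to check my balance")
    memory.append_turn("call-42", "agent: Sure, which account?")
    # The agent reloads this context before generating its next reply.
    print(memory.get("call-42"))
```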

Regulatory compliance in banking

A global bank applies the same architectural principles to Basel III/IV reporting. Trading systems publish large volumes of data to Kafka in diverse formats. A BPMN workflow orchestrator (Camunda 8) coordinates AI agents that standardise and validate the data. Redis supports idempotent processing by recording which events have already been handled, so redelivered events are not processed twice. Meanwhile, container orchestration (Kubernetes) auto-scales infrastructure with trading volumes, so that capacity and cost track actual demand. The entire pipeline is fully auditable, which is critical for meeting regulatory requirements.
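Idempotent processing usually means recording each event's unique ID and skipping any ID already seen. Redelivered events, for example after a consumer restart, are then not handled twice. The sketch below assumes that each trade event carries an `event_id`. `DedupStore` mimics, in memory, the "set only if absent" behaviour of Redis `SET key value NX`. The field names and the standardisation step are hypothetical. If processing fails, the claim is released so that a corrected redelivery can be retried.

```python
class DedupStore:
    """In-memory stand-in for Redis 'SET key value NX' (set only if absent) semantics."""

    def __init__(self) -> None:
        self._keys: set[str] = set()

    def set_if_absent(self, key: str) -> bool:
        """Claim `key`; return False if it was already claimed."""
        if key in self._keys:
            return False
        self._keys.add(key)
        return True

    def delete(self, key: str) -> None:
        self._keys.discard(key)


def handle_trade_event(event: dict, store: DedupStore, ledger: list[dict]) -> bool:
    """Standardise and record a trade event at most once per `event_id`.

    Returns True if processed, False if the event was a duplicate.
    """
    key = f"processed:{event['event_id']}"
    if not store.set_if_absent(key):
        return False  # already handled, e.g. redelivered after a consumer restart
    try:
        # Hypothetical standardisation into a canonical record.
        record = {
            "event_id": event["event_id"],
            "notional": float(event["notional"]),
            "currency": event["ccy"].upper(),
        }
        ledger.append(record)
    except (KeyError, ValueError, AttributeError):
        store.delete(key)  # release the claim so a corrected redelivery can be processed
        raise
    return True


if __name__ == "__main__":
    store, ledger = DedupStore(), []
    trade = {"event_id": "T-1001", "notional": "2500000", "ccy": "eur"}
    print(handle_trade_event(trade, store, ledger))  # first delivery is processed
    print(handle_trade_event(trade, store, ledger))  # redelivery is skipped
    print(ledger)
```

In a distributed setup, the claim in Redis and the write to the output store are separate operations and are not atomic. Production designs therefore typically add an expiry to the claim, or record the processed ID in the same transaction as the output.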

Best practices for event-driven AI orchestration

- Design Kafka topics and partitions thoughtfully
  - Align topic structures with logical business workflows (e.g., by user session or asset class).
  - Over-partitioning can increase overhead and complexity, so balance throughput needs against operational costs.

- Separate process orchestration from AI task execution
  - BPMN workflows handle business processes, while AI agents handle data analysis and decision-making.
  - This modular approach simplifies maintenance, letting teams optimise each component independently.

- Incorporate observability and model monitoring
  - Integrate AI observability platforms (e.g., Arize AI, Weights & Biases) to track model performance, detect drift, and generate compliance reports.
  - Logging both process steps (via BPMN) and AI outputs (via Kafka event logs) provides a comprehensive audit trail.

- Plan for cost and complexity
  - Technologies such as Redis Streams, Ray Serve, and advanced edge computing can significantly improve performance but may introduce higher operational overhead.
  - Evaluate cloud, on-premises, and hybrid approaches. Cost optimisation often requires balancing throughput demands, regulatory constraints, and real-time latency goals.
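Aligning topics with business entities usually comes down to choosing the record key. Records with the same key go to the same partition, and Kafka preserves ordering only within a partition. The sketch below assumes session IDs as keys. It uses CRC32 as a stand-in hash, whereas Kafka's default partitioner hashes keys with murmur2, so the partition numbers will not match a real cluster. Only the principle carries over. `partition_for` and `route` are hypothetical helpers.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition with a stable hash.

    CRC32 is an illustrative stand-in; Kafka's default partitioner uses murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: list[tuple[str, str]], num_partitions: int) -> dict[int, list[tuple[str, str]]]:
    """Group (key, value) events by partition, preserving arrival order within each partition."""
    partitions: dict[int, list[tuple[str, str]]] = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)


if __name__ == "__main__":
    events = [
        ("session-A", "turn-1"),
        ("session-B", "turn-1"),
        ("session-A", "turn-2"),
        ("session-C", "turn-1"),
        ("session-A", "turn-3"),
    ]
    for partition, records in sorted(route(events, num_partitions=6).items()):
        # All turns of one session share a partition, so their relative order is kept.
        print(partition, records)
```

The mapping depends on the partition count. Increasing partitions later therefore remaps many keys to different partitions, which is one reason to size partition counts deliberately from the start.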

Implementation roadmap

Below is a summarised “Key Decisions” table that aligns each technological choice with a typical use case, plus considerations on cost and complexity:

| Technology | Role | Use case | Complexity and cost |
|---|---|---|---|
| Apache Kafka | Core event bus, log for replay | Nearly all event-driven AI workflows | Medium complexity, but vital for resilience |
| Kafka Streams | Real-time data transformation/aggregation | Pre-processing data before AI inference | Higher development complexity; can reduce AI load |
| Camunda 8 (Zeebe) | BPMN process orchestration | Long-running workflows with branching logic | Modest overhead; simplifies business logic |
| Apache Flink | Low-latency stateful event processing | High-velocity data (e.g., fraud alerts) | Higher operational overhead; very low latency |
| Redis + Redis Streams | In-memory caching and session management | Session state for conversational AI, caching event IDs | Medium complexity; fast and cost-effective |
| ClickHouse/PostgreSQL | Materialised views and audit store | Compliance and historical analytics | Scales well; requires database management overhead |
| Ray Serve | Distributed inference for AI agents | Serving thousands of concurrent AI requests | Higher operational overhead; large payoff at scale |
| Hugging Face Inference Endpoints | Quick model deployment (API-based) | Fast iteration on ML models | Minimises infrastructure setup but can be costlier |
| ONNX Runtime | Model optimisation for cross-platform use | Reducing compute costs on CPUs, GPUs and other accelerators | Some model conversion overhead; cost savings |

Conclusion

An event-driven architecture powered by Kafka and BPMN-based orchestration offers a scalable, resilient framework for enterprise AI. By separating business process logic from AI tasks, organisations can optimise each layer independently, delivering seamless scalability, fault tolerance, and compliance-ready audit trails. Robust observability tooling and careful planning around cost and complexity help keep these architectures sustainable over time.

For enterprises committed to scalable, responsible AI, this roadmap provides a comprehensive blueprint. It addresses the intertwined requirements of real-time performance, fault tolerance, and regulatory compliance—helping safeguard both enterprise reputation and the experience of customers, regulators, and stakeholders alike.

Contact us


Partner and Forensic Services and Financial Crime Leader, Zurich, PwC Switzerland

+41 58 792 17 60